Unlike other decoders I've read about (or written), this one is neither based on simple pulse lengths (PulseIn) nor does it have a "start of second acquisition" phase separate from the "receive & decode a full minute" phase. Instead, the 'start of second' is continuously tracked by statistics over the last 30-60 seconds of data, and then at the end of each second a symbol is decoded.
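To make the "decode a symbol at the end of each second" step concrete, here is a minimal sketch of classifying one second of samples once its start is known. It is not lifted from CWWVB: the `Symbol` enum, the `classify_second` function and the thresholds are all made up for illustration, and it assumes a sample is `true` while the carrier is at reduced power. The only outside fact it leans on is the nominal WWVB amplitude code, where the carrier is reduced for about 0.2 s (zero), 0.5 s (one) or 0.8 s (marker) at the start of each second.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical symbol names for illustration; not taken from CWWVB.
enum class Symbol { Zero, One, Mark };

// Classify one second of samples, aligned so index 0 is the start of the second.
// Assumption: a sample is true while the carrier is at reduced power.
// Nominal reduced-power durations: 0.2 s (zero), 0.5 s (one), 0.8 s (marker).
Symbol classify_second(const std::vector<bool>& second) {
    std::size_t reduced = 0;
    for (bool s : second) {
        if (s) ++reduced;
    }
    // Fraction of the second spent at reduced power.
    const double frac =
        second.empty() ? 0.0 : static_cast<double>(reduced) / second.size();
    // Thresholds sit halfway between the nominal durations (assumed, untuned).
    if (frac < 0.35) return Symbol::Zero;
    if (frac < 0.65) return Symbol::One;
    return Symbol::Mark;
}
```

Counting samples over the whole second, rather than timing a single pulse, means a few noisy samples only nudge the fraction instead of splitting the pulse in two.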

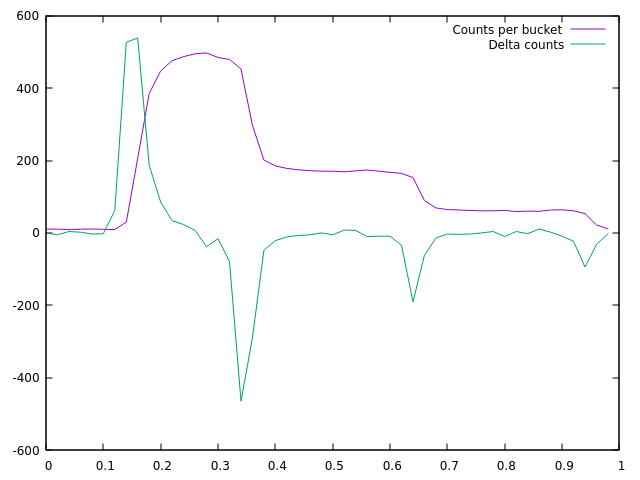

The start of the second is the sample where the discrete derivative of the signal strength is greatest. In the image above, which is based on a rather noisy set of input data, that is at an offset of 0.16.

Because the statistic is continuously (but efficiently) tracked, it doesn't matter if the local sampling clock has an error relative to WWVB. This just causes the offset to slowly shift, but doesn't affect decoding.
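One way to picture this kind of tracking is the sketch below. It is my own illustration and not necessarily how CWWVB does it: `EdgeTracker`, the per-bin exponential average and the `1/32` smoothing constant are all assumptions, as is the polarity (1.0 while the carrier is reduced). Each sample position within the second gets its own running average, and the start of the second is taken to be the bin with the largest rise from its predecessor.

```cpp
#include <array>
#include <cstddef>

// Hypothetical sketch; names and constants are not taken from CWWVB.
template <std::size_t N>  // N = samples per second
class EdgeTracker {
public:
    // Feed one sample: 1.0 while the carrier is reduced, 0.0 at full power
    // (an assumed polarity).
    void add_sample(double value) {
        // Per-bin exponential moving average, so older seconds fade out.
        bins_[phase_] += kAlpha * (value - bins_[phase_]);
        phase_ = (phase_ + 1) % N;
    }

    // Index of the bin whose average rises most steeply from the previous
    // bin, wrapping around at the end of the second.
    std::size_t start_of_second() const {
        std::size_t best = 0;
        double best_delta = bins_[0] - bins_[N - 1];
        for (std::size_t i = 1; i < N; ++i) {
            const double delta = bins_[i] - bins_[i - 1];
            if (delta > best_delta) {
                best_delta = delta;
                best = i;
            }
        }
        return best;
    }

private:
    static constexpr double kAlpha = 1.0 / 32;  // memory of roughly 30 seconds
    std::array<double, N> bins_{};
    std::size_t phase_ = 0;
};
```

If the local clock runs fast or slow, the steepest bin simply migrates from one index to the next over time, and `start_of_second()` follows it. This version rescans every bin on each query, which is simpler but less efficient than tracking the maximum incrementally.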

It's targeted at Cortex M microcontrollers, though it might fit on smaller micros like those on the classic Arduino. So far, I've only run it against logs from the WWVB Observatory, but it far outperforms my existing CircuitPython WWVB decoder (source code not online). In an hour where my existing clock (using a PulseIn-like strategy) received 0 minutes successfully due to storms in the area, the new algorithm decoded 39 out of 59 minutes.

The C++ code is called CWWVB and it is up on GitHub! It's not fully commented, but it does explain things more closely than this blog post does. My fresh receiver modules aren't coming before the end of the month, but I'm thinking of doing a very simple display, such as just showing MM:SS on a 4-digit 8-segment display, with an Adafruit Feather M4 for the microcontroller.

Entry first conceived on 25 October 2021, 2:19 UTC, last modified on 25 October 2021, 2:49 UTC

Website Copyright © 2004-2024 Jeff Epler