"interlz5" in Python -- Apple II text adventure adventures

On the socials, someone asked whether anyone knew where the source for a tool called "interlz5" lived; they had found a binary but not the original (presumably C or C++) source. (Update: S. V. Nickolas's source code was right in front of us this whole time, a nested zip inside apple_ii_inform_demo_files.zip and now attached here as well)

This tool is described in An Apple II Build Chain for Inform by Michael Sternberg.

Depending on the game, it is stored as 1 or 2 sides of a disk. The first side consists of the interpreter (16KiB) followed by up to 98.5KiB of the story. The second side consists of the remaining story, up to 157KiB.

Since I could run the original "interlz5" tool, I was able to confirm what it did: It copies the interpreter binary as-is, then re-arranges the first part of the story according to an "interleave" rule. If the game is a small one, that's all and you're done! (well, you need to save it as a ".do" file or your emulator may perform a second interleaving step on the data!)

Now, are you ready for the surprise? As Sternberg wrote, "If the story file is larger than 100,864 bytes, the remainder of the Z-code is stored on a second 18-sector disk image." interlz5 writes this as a "nib" format file with no header.

Why 100,864 bytes aka 98.5KiB? This appears to be how much can be loaded into the RAM of a 128KiB Apple IIe while leaving room for the interpreter & other required structures. Why is the special format only used on "side b"? Since there is already always spare space on "side a", no special encoding was needed. However, one does wonder whether the initial load time was better with the interleaved 16 sector tracks compared to if they had used the "18-sector" format.

Oh, but what exactly is the format? Sternberg's document doesn't contain any detail, and at the time I didn't have S. V. Nickolas's interlz5 source to refer to.

I'm aware that 18-sector tracks were used by some other games (The term RWTS18 comes up) but there seem to be multiple different forms. In the case of these Z5 disks, each track is actually one big sector containing 4608 bytes of useful data encoded like so:

- The special sequence "d5 aa ad"

- The 0-based track number encoded as two bytes of gcr4

- 18 groups of 343 "gcr6" nibbles, organized just like a 256-byte sector

- Padding with "ff" flux patterns to the end of the track

The main reason that more data can be stored is because the extra space between sectors is removed. This lets 18×256 bytes be stored instead of just 16×256 bytes. This is inconvenient if the disk were to be written, because you'd have to rewrite the whole track. But in normal use, the game disk is read-only.

The groups of 343 "gcr6" nibbles all decode to 256 bytes exactly as the standard disk encoding, with the first 86 bytes encoding the two least significant bits of each byte and the remaining 256 bytes encoding the high 6 bits. Just like normal sector encoding, there's a byte sometimes called the "checksum" that is initialized to 0 and xor'd with the outgoing value before the gcr table lookup. The last gcr6 nibble is the final checksum value. This checksum is reset to 0 at each 256-byte boundary.

My program produces nearly the same output as interlz5, except for differences that I think stem from use of undefined data in the original compiled version. This means my files are not bit-for-bit identical. There are two specific causes:

- If the input z5 file does not exactly fill a track, interlz5 appears to fill with uninitialized value; I fill with zeros

- Two bits in each "twobit" area are unused. interlz5 appears to use a value from the first byte of the next sector, or an uninitialized byte at the end of data. I use the next byte if one exists, otherwise zero.

Due to the xor/checksum feature, any difference in data being encoded actually affects a subsequent gcr byte as well.

Compatibility? I had success with a specific pair of files:

9bec6046eca15a720a40e56522fef7624124b54e871b0a31ff9d5f754155ef00 interp.bin 6179b5d5b17d692ec83fe64101ff8e4092390166c2b05063e7310eb976b93ea0 Advent.z5With files output by either tool, I could successfully boot the game in AppleWin and go NORTH into the forest.

Sadly, I did not have luck with Hitchhiker's Guide or Beyond Zork ".z5" files I obtained from the internet, with either tool.

interl5.py is licensed GPL-3.0 and is tested with Python 3.13. It requires no packages outside the Python standard library. I have no plans to further develop it.

Files currently attached to this page:

| Advent.z5 | 135.0kB |

| apple_ii_inform2.pdf | 763.3kB |

| interl5.py | 3.5kB |

| interlz5-001.zip | 33.9kB |

| interp.bin | 16.0kB |

Junk drawer & embedding

I have been working on leaving github.

One thing I liked from github was gist, including command-line upload and the ability to embed it. I want to replace this but with codeberg. And, I think I've gotten close. More polish wouldn't hurt, but ehhh...

First, here's the script for uploading:

And here's an example of embedding:

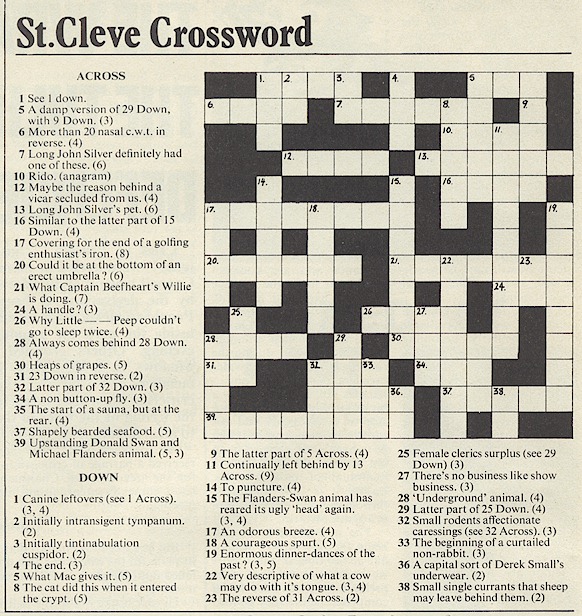

Thick As A Brick / St Cleve Crossword solution

Has the crossword from the fake newspaper in the Jethro Tull album Thick as a Brick, titled St Cleve Crossword or Saint Cleve Crossword, ever been solved? Is it actually a UK style cryptic? Or is it just nonsense?

This is from a copy of the album booklet that I found at world-enlightenment.com copied here for posterity.

Migrating from github to codeberg: existing readthedocs projects

Recently, I made the decision to migrate select projects of mine from github to codeberg.

Today I started migrating wwvbpy, which has an existing readthedocs configuration. In readthedocs "edit project" page, the "repository URL" field was greyed out and uneditable.

It took me a few minutes but I eventually realized that before I could set the project URL I had to clear the "connected repository" and save the settings.

After that, I was able to manually edit the URL and then manually configure the outgoing webhooks, so that pushes to codeberg would trigger doc builds.

Variations on 'if TYPE_CHECKING'

Suggested by mypy documentation:

from typing import TYPE_CHECKING

if TYPE_CHECKING:

from typing import …

Works in mypy, pyright, pyrefly. Found in setuptool_scm generated __version__.py:

TYPE_CHECKING = False

if TYPE_CHECKING:

from typing import …

Works in mypy, pyright, pyrefly. Best variant for CircuitPython?

def const(x): return x

TYPE_CHECKING = const(0)

if TYPE_CHECKING:

from typing import …

Works in mypy only. Does not work in pyright, pyrefly:

if False:

from typing import …

Dear Julian

Julian,

I just found the message you wrote in 2018.

I miss you and I wish you were in my life.

That is all, that's the message.

Mutual Tail Recursion in Python, fully mypy-strict type checked

As one does, I was thinking about how Python is criticized for lacking tail recursion optimization.

I came up with an idea of how to implement this without new language features, by using a decorator around the tail recursive function that catches a special Recur exception and then turns around and calls the same function with the new arguments:

class Recur(BaseException, Generic[P]):

args: P.args

kwargs: P.kwargs

def __init__(self, *args: P.args, **kwargs: P.kwargs):

super().__init__(*args)

self.kwargs = kwargs

def recurrent(f: Callable[P, T]) -> Callable[P, T]:

@functools.wraps(f)

def wrapper(*args: P.args, **kwargs: P.kwargs) -> T:

while True:

try:

return f(*args, **kwargs)

except Recur as r:

args = r.args

kwargs = r.kwargs

return wrapper

It can be used like so:

@recurrent

def gcd(a: int, b: int) -> int:

print(f"gcd({a}, {b})")

if b == 0:

return a

raise Recur(b, a % b)

print(gcd(1071, 462))

This is not an original idea. It has been documented before e.g., by Chris Penner. This code all properly type-checks under mypy --strict (some context not shown). However, it doesn't allow mutual tail recursion.

I'll be honest: I didn't find any well-motivated examples for mutual tail recursion! Everyone uses the same awful poorly-motivated example of is-odd and is-even. But, because it was a challenge to placate the mypy type checker, I wanted to implement it anyway.

The problem lies in the implementation of the wrapper function: args and kwargs have the types given in the initial recurrent call, and the types can't change just because f changes.

The solution, which I realized a few weeks later, was to move the responsibility to actually dispatch the recurrent call into the Recur instance. There can be many Recur instances, but there inside the wrapper function they all simply have the same type: Recur!

Here's the full implementation, which type checks clean with mypy --strict (1.11.2) and runs in python 3.11.2:

from __future__ import annotations

import functools

from typing import Callable, ParamSpec, TypeVar, Generic, NoReturn

P = ParamSpec("P")

T = TypeVar("T")

class Recur(BaseException, Generic[P, T]):

f: Callable[P, T]

args: P.args

kwargs: P.kwargs

def __init__(self, f: Callable[P, T], args: P.args, kwargs: P.kwargs):

super().__init__()

self.f = f.f if isinstance(f, Recurrent) else f

self.args = args

self.kwargs = kwargs

def __call__(self) -> T:

return self.f(*self.args, **self.kwargs)

def __repr__(self) -> str:

if self.kwargs:

return f"<Recur {self.f.__name__}(*{self.args}, **{self.kwargs})>"

return f"<Recur {self.f.__name__}{self.args})>"

__str__ = __repr__

class Recurrent(Generic[P, T]):

f: Callable[P, T]

def __init__(self, f: Callable[P, T]) -> None:

self.f = f

def __call__(self, *args: P.args, **kwargs: P.kwargs) -> T:

r = Recur(self.f, args, kwargs)

while True:

try:

return r()

except Recur as exc:

r = exc

def recur(self, *args: P.args, **kwargs: P.kwargs) -> NoReturn:

raise Recur(self.f, args, kwargs)

def __repr__(self) -> str:

return f"<Recurrent {self.f.__name__}>"

And here's an example use:

import sys

from recur import Recurrent

@Recurrent

def gcd(a: int, b: int) -> int:

print(f"gcd({a}, {b})")

if b == 0:

return a

gcd.recur(b, a % b)

@Recurrent

def is_even(a: int) -> bool:

assert a >= 0

if a == 0:

return True

is_sum_odd.recur(a, -1)

@Recurrent

def is_sum_odd(a: int, b: int) -> bool:

c = a + b

assert c >= 0

if c == 0:

return False

is_even.recur(c - 1)

print(gcd)

print(gcd(1071, 462))

print(is_even(sys.getrecursionlimit() * 2))

print(is_even(sys.getrecursionlimit() * 2 + 1))

Enabling markdown

This blog is based on its own weird markup language.

Now in 2024 everyone's used to markdown instead.

So I made a markdown tag for this blog.

You won't notice much as a reader, but for me this is a big convenience.

One thing I noticed is that code rendered inside backticks looks a bit

different.

Talking directly to in-process tcl/tk

Vintage X Bitmap Font "VT100 graphics characters"

datetime in local time zone

Sunflower Butter aka Sun Butter or Sunbutter (plus Chocolate Sun Butter)

An efficient pair of polynomials for approximating sincos

Leaving my roles in LinuxCNC

Linux ThinkPad T16 Microphone "Muted" Indicator

Notes on using skyui with Skyrim Anniversary Edition from GOG

All older entries

Website Copyright © 2004-2024 Jeff Epler